The main purpose of the configure script.

Netmap enable zero copy with host stack install

The netmap port for Linux is built and installed using the standard `./configure && make && sudo make install` workflow.

For discrete graphics cards, zero-copy requests still take place over the PCIe bus, so data transfers can be slow. On an APU, however, zero-copy semantics can greatly increase transfer speed because of the APU's architecture (pictured below): the PCIe bus is replaced with the Unified North Bridge (UNB), which allows for faster transfers.

Be aware that when you use zero-copy semantics with memory mapping, you will see extremely poor bandwidth when reading a device-side buffer from the host. These bandwidths are on the order of 0.01 Gb/s and can easily become a new bottleneck for your code.

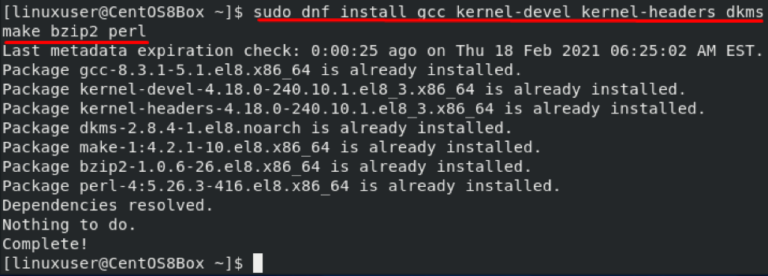

Netmap enable zero copy with host stack drivers

[...] facilitates zero-copy I/O while isolating kernel memory from the user. Additionally, we leverage existing HW capabilities (e.g., NVIDIA QPs, Intel ADQ) to facilitate isolation between processes.

You are correct in your understanding of how zero-copy works. The basic premise is that you can access either the host memory from the device, or the device memory from the host, without needing an intermediate buffering step in between.

You can perform zero-copy by creating buffers with one of the following flags: `CL_MEM_AMD_PERSISTENT_MEM` (device-resident memory) or `CL_MEM_ALLOC_HOST_PTR` (host-resident memory). The buffers can then be accessed using memory-mapping semantics. Map the buffer with `void* p = clEnqueueMapBuffer(queue, buffer, CL_TRUE, CL_MAP_WRITE, 0, size, 0, NULL, NULL, &err);` and release the mapping with `err = clEnqueueUnmapMemObject(queue, buffer, p, 0, NULL, NULL);`.

Using zero-copy, you may be able to gain performance over an implementation that copies the data explicitly between host and device. On some implementations, the map and unmap calls can hide the cost of a data transfer. If the implementation behaves this way, there is no benefit to using the mapping approach. However, AMD's newer OpenCL drivers allow the data to be written directly, making the cost of mapping and unmapping almost zero.

I am a little bit confused about how exactly zero-copy works.

1- I want to confirm that the following corresponds to zero-copy in OpenCL:

[Diagram: over PCI-E, the CPU directly accesses GPU memory and the GPU directly accesses system memory; copies c3 and c2 are avoided, marked with an X.]

The GPU/CPU is accessing system/GPU RAM directly, without an explicit copy.

2- What is the advantage of having this? PCI-E is still limiting the overall bandwidth. Or is the only advantage that we can avoid copies c2 and c1/c3 in the situations above?
